
Who knew even AI’s job would be in danger? Well, it seems ChatGPT has met a challenger, and this one isn’t just making waves; it’s taking over the meme market too. Jokes aside, DeepSeek-R1 has entered the AI arena, and it’s changing the game for developers. According to reports, DeepSeek-R1 is 90–95% cheaper to use than OpenAI’s o1.

Unlike cloud-only models, DeepSeek-R1 is released with open weights, so users can run it directly on their own machines without relying on external servers. With a focus on reasoning, coding, and math, it offers open-source flexibility and strong performance.

Let’s break down what makes DeepSeek-R1 stand out.

What is DeepSeek-R1?

DeepSeek-R1 is an open-source AI model that you can run locally, meaning you can use it without sending data to external servers. It competes with top-tier systems in reasoning, mathematics, and coding while keeping your data under your control. The full model has 671 billion parameters (a mixture-of-experts design), and DeepSeek also released smaller distilled versions, from 1.5B to 70B parameters and based on Qwen and Llama, that fit on consumer hardware. Unlike proprietary models that are only reachable over the internet, DeepSeek-R1 gives developers full control over their data, making it a solid option for businesses with strict compliance and security requirements.

Key Features of DeepSeek-R1:

- Local Execution – Run AI-powered tasks without depending on the cloud.

- MIT Licensed – Open model weights that developers can tweak, integrate, and build on.

- Quantized and Distilled Variants – Quantized builds and smaller distilled models reduce hardware requirements while retaining much of the full model’s performance.

- Top-Tier Reasoning – Competes with leading AI models in coding, logic, and problem-solving.
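The memory savings from quantization follow from simple arithmetic: weight storage is roughly the parameter count times the bits stored per weight. The sketch below is a back-of-the-envelope estimate, not an exact figure. It assumes 1 GB = 10^9 bytes and counts weights only, ignoring the KV cache and runtime overhead. Real 4-bit files (such as Ollama’s default builds) typically average slightly more than 4 bits per weight, so actual downloads run a bit larger. `approx_weight_gb` is a hypothetical helper name.

```python
def approx_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Back-of-the-envelope size of the model weights in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits_per_weight / 8) bytes, divided by 1e9,
    simplifies to params_billion * bits_per_weight / 8. Excludes the KV cache,
    activations, and runtime overhead, so real memory use is higher.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    # Same model at 16-bit vs 4-bit, plus a small distilled and the full model.
    for params, bits in [(7, 16), (7, 4), (1.5, 4), (671, 4)]:
        print(f"{params}B params at {bits}-bit: ~{approx_weight_gb(params, bits):.2f} GB")
```

By this estimate a 7B model drops from about 14 GB of weights at 16-bit to about 3.5 GB at 4-bit, while the full 671B model still needs roughly 335 GB even at 4-bit, which is why local setups usually run the distilled versions.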

Why DeepSeek-R1 is Important for Local AI Development

Let’s get real: AI is awesome, but it’s not without its challenges. Most commercial AI models are accessed through cloud-based APIs, which can be expensive, add network latency, and raise privacy concerns. DeepSeek-R1 flips the script by bringing AI to your local machine.

And if you want to integrate AI into your existing systems, serving the model through a local HTTP API (Ollama exposes one on port 11434 by default) lets you add AI capabilities without overhauling your entire setup.

Here’s why that’s a big deal:

Data Privacy

Your data stays on your device. Prompts and outputs never pass through third-party servers, which removes a major source of leaks. This is especially important for industries like healthcare, finance, and legal services, where sensitive information must be protected. Because DeepSeek-R1 is open-source and runs locally, you don’t have to worry about your data being sent to external servers or stored in third-party databases. This can make it easier to meet data protection regulations like GDPR or HIPAA, giving businesses peace of mind.

For developers working on proprietary projects or handling confidential client data, local execution greatly reduces the risk of data breaches or unauthorized access. It’s a game-changer for anyone who prioritizes security and privacy.
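One practical way to enforce this in your own code is to refuse to send prompts anywhere but the local machine. The sketch below assumes a loopback-only policy; `is_local_endpoint` and `require_local` are hypothetical helper names, and a stricter or looser rule (for example, allowing a trusted LAN server) may suit your setup better.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True only if the URL points at this machine (loopback)."""
    host = urlparse(url).hostname  # lowercased; brackets stripped from IPv6
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (e.g. a remote hostname): treat as non-local.
        return False


def require_local(url: str) -> str:
    """Raise before any data is sent if the endpoint is not on this machine."""
    if not is_local_endpoint(url):
        raise ValueError(f"refusing to send data to non-local endpoint: {url}")
    return url
```

Calling `require_local(...)` on the endpoint URL before each request makes an accidental switch to a remote API fail loudly instead of silently shipping data off the device.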

Cost Savings

Skip the recurring cloud costs and run AI on hardware you already own. Cloud-based AI services often come with subscription fees, usage-based pricing, or hidden costs for high-volume usage. Over time, these costs can add up, especially for startups or small businesses with limited budgets. DeepSeek-R1 lets you keep those pennies in your pocket.

Remember the old saying often attributed to Benjamin Franklin: “A penny saved is a penny earned.”

DeepSeek-R1 allows you to bypass these recurring fees. By running the model locally, you can leverage your existing hardware, whether it’s a high-end GPU or a powerful CPU, and your main ongoing cost becomes electricity. This not only reduces operational expenses but also makes AI more accessible to individual developers and smaller teams who might not have the budget for expensive cloud subscriptions.
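Whether local hardware actually pays off comes down to a break-even calculation. This minimal sketch uses made-up figures purely for illustration (a $2,000 machine, a $250 monthly cloud bill, and $20 a month in electricity); plug in your own numbers. `breakeven_months` is a hypothetical helper, not part of any library.

```python
import math


def breakeven_months(hardware_cost: float, cloud_cost_per_month: float,
                     local_running_cost_per_month: float) -> int:
    """Whole months until a one-off hardware purchase beats a recurring cloud bill."""
    monthly_saving = cloud_cost_per_month - local_running_cost_per_month
    if monthly_saving <= 0:
        raise ValueError("local running costs match or exceed the cloud bill; no break-even")
    # Round up: the hardware has paid for itself only after a full month of savings.
    return math.ceil(hardware_cost / monthly_saving)


if __name__ == "__main__":
    # Hypothetical inputs, not real prices.
    print(breakeven_months(2000, 250, 20))
```

With those hypothetical inputs the monthly saving is $230, so the machine pays for itself after 9 months (2,000 / 230 ≈ 8.7, rounded up).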

Customization

Tweak the model to fit your specific needs without jumping through hoops. One of the notable advantages of open-source AI tools like DeepSeek-R1 is the ability to customize them. Unlike proprietary AI systems that lock you into predefined functionality, DeepSeek-R1 gives you full control.

Developers can fine-tune the model to suit their unique requirements, whether it’s optimizing it for a specific programming language, tailoring it for a niche industry, or integrating it into a custom workflow. This level of flexibility is invaluable for businesses looking to create tailored AI solutions that align perfectly with their goals.

Additionally, the open-source nature of DeepSeek-R1 encourages collaboration and innovation. Developers can share improvements, build on each other’s work, and contribute to the model’s evolution, creating a vibrant ecosystem of tools and applications.

Faster Response Times

Local execution can mean faster responses than cloud-based alternatives. When you run an AI model locally, you eliminate the network round trip to and from remote servers, although actual speed also depends on your hardware and the model size you choose. This is particularly beneficial for real-time applications, such as coding assistance, conversational AI, or edge computing.

For example, developers working on time-sensitive projects can get quick feedback and suggestions without waiting on network round trips or API rate limits. This not only boosts productivity but also enhances the overall user experience.
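Because the actual speed-up depends on your hardware and model size, it is worth measuring rather than assuming. This sketch times how long the first streamed chunk takes to arrive, which is the delay users notice most. `open_stream` is an assumed zero-argument callable that starts a request and returns an iterable of text chunks; you could wrap a streaming request to a local server or to a cloud API in it and compare the two. The helper name `time_to_first_chunk` is hypothetical.

```python
import time
from collections.abc import Callable, Iterable


def time_to_first_chunk(open_stream: Callable[[], Iterable[str]]) -> tuple[str, float]:
    """Start a streamed request and return (first_chunk, seconds_until_it_arrived).

    The timer starts before the request is opened, so connection setup and
    any network round trip are included in the measurement.
    """
    start = time.perf_counter()
    chunks = iter(open_stream())
    first = next(chunks, None)
    elapsed = time.perf_counter() - start
    if first is None:
        raise ValueError("stream produced no chunks")
    return first, elapsed


if __name__ == "__main__":
    # Stand-in stream that simulates a 50 ms wait before the first token.
    def fake_stream():
        time.sleep(0.05)
        yield "Hello"
        yield ", world"

    first, seconds = time_to_first_chunk(fake_stream)
    print(f"first chunk {first!r} after {seconds * 1000:.0f} ms")
```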

Offline Access

Work in remote areas or places with spotty internet? No problem. Many developers and businesses operate in environments where internet connectivity is unreliable or unavailable. For example, field researchers, remote engineers, or even developers working in rural areas often face challenges with cloud-based AI tools.

As Tim Berners-Lee, the inventor of the World Wide Web, put it, “The web doesn’t just connect machines, it connects people.” But sometimes, those connections are down!

Because DeepSeek-R1 runs entirely on your own machine, it solves this problem by enabling offline access. Once the model is downloaded, you can run it without an internet connection. This ensures uninterrupted productivity, whether you’re coding, analyzing data, or solving complex problems in real time.

Scalability

DeepSeek-R1’s local execution also makes it easy to scale gradually. You can run a small distilled version on a single laptop or deploy larger versions across multiple machines, each serving requests on its own hardware. This flexibility is ideal for businesses looking to integrate AI into their operations without overhauling their infrastructure.

For instance, a small startup can start with a single machine and gradually scale up as their needs grow. Similarly, enterprises can deploy DeepSeek-R1 across various departments or locations, ensuring consistent performance and accessibility.
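A simple way to spread requests across several machines is round-robin: each new request goes to the next server in the list. The sketch below assumes each machine runs its own Ollama server on the default port 11434; the hostnames `gpu-box-1` and `gpu-box-2` and the `RoundRobinPool` class are hypothetical placeholders. It deliberately leaves out health checks and failover, which a production setup would need.

```python
from itertools import cycle


class RoundRobinPool:
    """Hand out endpoint URLs in turn so requests spread evenly across machines."""

    def __init__(self, endpoints):
        endpoints = list(endpoints)
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self._cycle = cycle(endpoints)

    def next_endpoint(self) -> str:
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical hosts, each assumed to run an Ollama server on the default port.
    pool = RoundRobinPool(["http://gpu-box-1:11434", "http://gpu-box-2:11434"])
    for _ in range(3):
        print(pool.next_endpoint())
```

Adding capacity then means appending another URL to the list, which mirrors the "start with one machine, grow later" path described above.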


How to Run DeepSeek-R1 Locally (Step-by-Step Guide)

DeepSeek-R1 can be set up on consumer-grade GPUs and CPUs using tools like Ollama. On typical consumer hardware you will be running one of the smaller distilled versions, since the full 671-billion-parameter model needs server-class hardware. Follow this step-by-step guide to get it running on your machine.

Step 1: Install Ollama

Ollama is a runtime that lets models like DeepSeek-R1 run locally. It provides an easy way to download and execute models without complex setup.

macOS users: Install Ollama with Homebrew by running:

brew install ollama

Windows & Linux users: Visit the Ollama website (ollama.com) to download the version for your platform and follow its installation instructions. On Linux, the site also provides a one-line install script.

Step 2: Download DeepSeek-R1

Once Ollama is installed, pull the DeepSeek-R1 model to your system:

ollama pull deepseek-r1

If your hardware resources are limited, download a smaller distilled version of the model instead, such as the 1.5-billion-parameter variant:

ollama pull deepseek-r1:1.5b

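Smaller variants need far less memory and run faster, at the cost of some reasoning quality. Other sizes, such as deepseek-r1:7b, deepseek-r1:14b, and deepseek-r1:32b, sit in between.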
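To confirm from code that a download finished, you can ask the local server which models it has. Ollama’s `GET /api/tags` endpoint returns that list as JSON (the same information `ollama list` prints). This sketch only parses a response body, so it runs without a server; the sample body is trimmed to the `name` field, while real responses carry more fields such as size and digest. The helper names are hypothetical.

```python
import json


def normalize_model_name(name: str) -> str:
    """Ollama treats an untagged name like 'deepseek-r1' as 'deepseek-r1:latest'."""
    last_segment = name.rsplit("/", 1)[-1]  # ignore any registry/namespace prefix
    return name if ":" in last_segment else f"{name}:latest"


def has_model(tags_response_body: str, wanted: str) -> bool:
    """Check a GET /api/tags response body for a locally available model."""
    models = json.loads(tags_response_body).get("models", [])
    wanted = normalize_model_name(wanted)
    return any(normalize_model_name(m.get("name", "")) == wanted for m in models)


if __name__ == "__main__":
    # Trimmed sample body; a real one comes from http://localhost:11434/api/tags.
    body = '{"models": [{"name": "deepseek-r1:1.5b"}, {"name": "llama3.2:latest"}]}'
    print(has_model(body, "deepseek-r1:1.5b"))
```

Note that an untagged name means the `:latest` tag, so having only `deepseek-r1:1.5b` installed does not count as having `deepseek-r1`.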

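Step 3: Start the Ollama Server

Both ollama pull and ollama run talk to a local Ollama server. The macOS and Windows apps, and the Linux install script, usually start it automatically in the background. If it isn’t running, start it manually with: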

ollama serve

Leave this running while you use DeepSeek-R1. If a server is already running, this command reports that the address is already in use, which you can safely ignore.
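If you script against Ollama, it helps to check that the server is actually up before sending requests. This minimal sketch assumes the default address `http://localhost:11434`, where Ollama’s root path normally answers with a short "Ollama is running" message; it simply treats any HTTP 200 reply as healthy. Proxies are bypassed on purpose, since the server is local. `ollama_is_running` is a hypothetical helper name.

```python
import http.client
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if a server answers HTTP 200 at base_url, False otherwise."""
    # Empty ProxyHandler: never route a localhost health check through a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException, ValueError):
        # Connection refused, timeout, HTTP error status, or malformed URL.
        return False


if __name__ == "__main__":
    print("Ollama server up:", ollama_is_running())
```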

Step 4: Run DeepSeek-R1

With the server active, launch the model using:

ollama run deepseek-r1

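This opens an interactive prompt where the model can help with tasks like coding, research, and content generation; type /bye to exit. If you pulled a specific size, run that tag instead (for example, ollama run deepseek-r1:1.5b). If the model isn’t downloaded yet, ollama run pulls it first.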
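DeepSeek-R1 is a reasoning model: it typically writes out its chain of thought, wrapped in `<think>...</think>` tags, before its final answer. Depending on your Ollama version and settings, that reasoning may arrive inline in the response text. If your application only needs the answer, a small parser like this one can separate the two. It is a sketch that assumes at most one `<think>` block per response; `split_reasoning` is a hypothetical name.

```python
import re

# Non-greedy match across newlines for the first <think>...</think> block.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is "" if no <think> block is present."""
    match = _THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    raw = "<think>\n2 + 2 = 4\n</think>\n\nThe answer is 4."
    print(split_reasoning(raw))
```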

Step 5: Optimize for Performance

For improved performance on lower-end hardware:

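- Use a smaller distilled variant or a more heavily quantized build to reduce memory usage (Ollama’s default downloads are already quantized).

- Make sure the model fits in available RAM or GPU memory; close other memory-hungry applications, since a model that spills out of GPU memory runs much more slowly.

- Tune inference settings such as the context window size (num_ctx), either in a Modelfile or per request through Ollama’s API.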
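Settings like these can also be passed per request. This sketch builds a JSON body for Ollama’s `POST /api/generate` endpoint with an `options` object: `num_ctx` sets the context window (smaller values use less memory) and `temperature` controls randomness. The 0.6 default follows the 0.5 to 0.7 range DeepSeek recommends for R1; the 4096 context size is just an example. It also computes generation speed from the `eval_count` and `eval_duration` (nanoseconds) fields Ollama includes in its final response, so you can compare settings; running `ollama run` with `--verbose` prints a similar eval rate. The helper names are hypothetical.

```python
import json


def build_generate_payload(model: str, prompt: str, num_ctx: int = 4096,
                           temperature: float = 0.6) -> bytes:
    """JSON body for POST /api/generate with per-request inference options."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one final JSON object instead of a stream
        "options": {"num_ctx": num_ctx, "temperature": temperature},
    }
    return json.dumps(body).encode("utf-8")


def tokens_per_second(final_response: dict) -> float:
    """Generation speed from eval_count (tokens) and eval_duration (nanoseconds)."""
    return final_response["eval_count"] / (final_response["eval_duration"] / 1e9)


if __name__ == "__main__":
    print(build_generate_payload("deepseek-r1:1.5b", "Explain recursion briefly."))
    # Illustrative stats: 120 tokens generated in 4 seconds.
    print(tokens_per_second({"eval_count": 120, "eval_duration": 4_000_000_000}))
```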

Step 6: Integrate DeepSeek-R1 Into Your Workflow

Now that DeepSeek-R1 is running, developers can use it for:

- AI-powered coding: Assisting with debugging and code suggestions.

- Data security: Processing sensitive information without cloud dependency.

- Research and analysis: Running AI experiments without external limitations.

- Low-latency interactions: No network round trips to a cloud API.
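For integration into your own tools, Ollama’s `POST /api/chat` endpoint accepts a list of messages and, when streaming, returns one JSON object per line, each carrying a piece of the reply in `message.content` until an object with `"done": true` arrives. The sketch below uses only the Python standard library to build the request body and reassemble a streamed reply. `stream_chat` assumes a server on the default local port and is defined but not called, so the parser can be tried on sample lines without a server; all helper names are hypothetical.

```python
import json
import urllib.request
from collections.abc import Iterable


def build_chat_body(model: str, user_message: str) -> bytes:
    """JSON body for POST /api/chat with a single user message, streaming on."""
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": True,
    }).encode("utf-8")


def collect_chat_stream(lines: Iterable[bytes]) -> str:
    """Join the content pieces of a streamed /api/chat reply (one JSON object per line)."""
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        chunk = json.loads(raw)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def stream_chat(model: str, message: str, base_url: str = "http://localhost:11434") -> str:
    """Send one chat turn to a running local server and return the full reply."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=build_chat_body(model, message),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return collect_chat_stream(resp)  # the response iterates line by line
```

With a server running, `stream_chat("deepseek-r1:1.5b", "Suggest a name for a test helper")` returns the full reply as one string; if the reasoning arrives inline, you may still want to strip the `<think>` section before showing it to users.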

Comparison with Other Open-Source LLMs

How does DeepSeek-R1 stack up against other open-source LLMs like LLaMA or Falcon?

| Feature | DeepSeek-R1 | LLaMA | Falcon |
|---|---|---|---|
| Local Execution | Yes | Yes | Yes |
| Quantization Support | Advanced | Basic | Basic |
| Open-Source License | MIT | Custom (Llama license) | Apache 2.0 |
| Performance | High | Moderate | Moderate |

DeepSeek-R1’s permissive MIT license and strong reasoning performance give it an edge for developers who value privacy and efficiency.

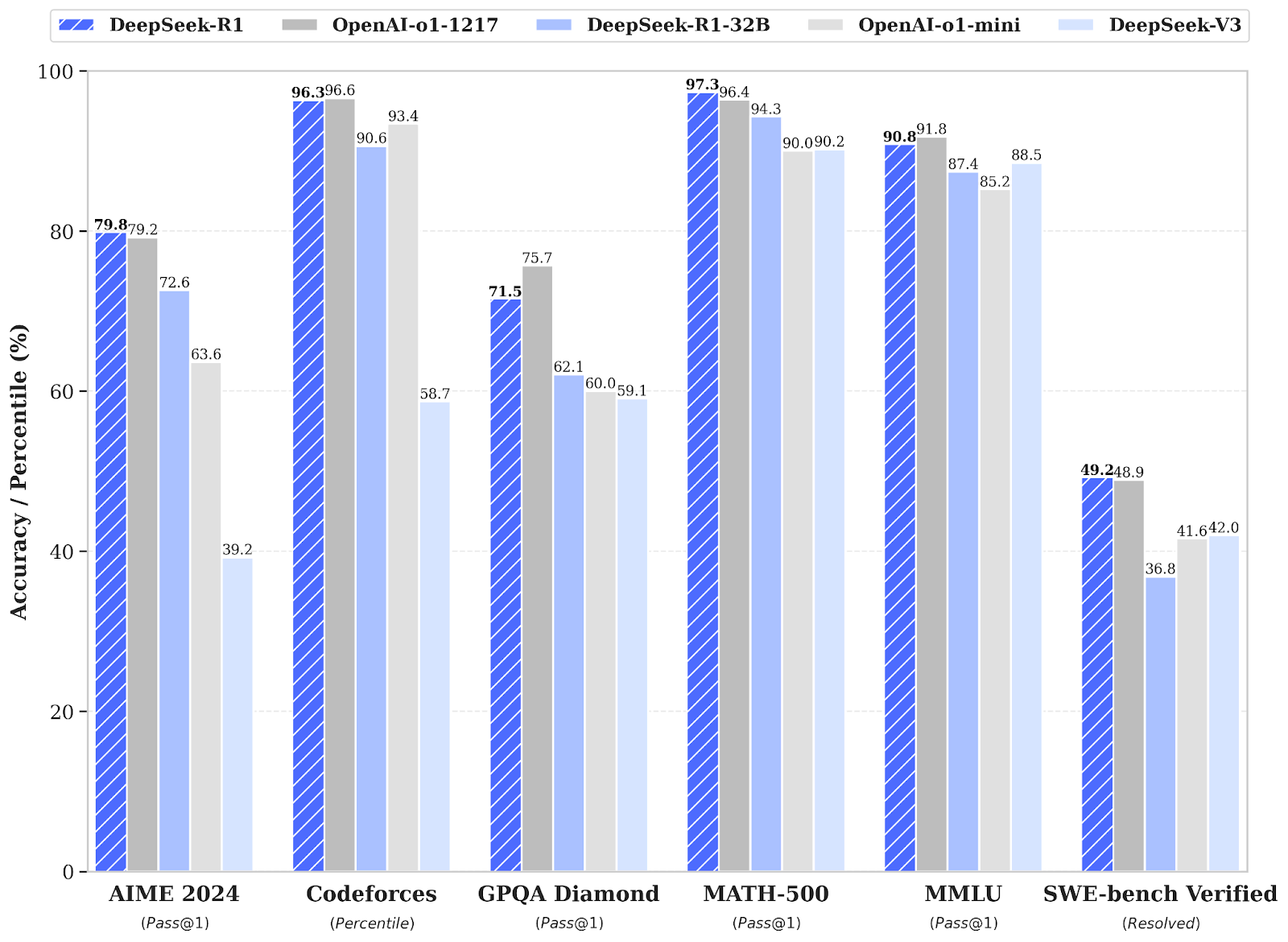

DeepSeek-R1 achieved 97.3% on the MATH-500 and 79.8% on the AIME 2024 mathematics tests. It also outperformed 96.3% of human programmers with a Codeforces rating of 2,029. On these standards, however, o1-1217 received scores of 79.2%, 96.4%, and 96.6%, respectively.

Additionally, it showed high general knowledge, with accuracy on MMLU with 90.8%.

Performance of DeepSeek-R1 vs OpenAI o1 and o1-mini (Image Source: https://venturebeat.com/)

Challenges & Limitations

While DeepSeek-R1 is impressive, it has a few downsides:

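- Hardware Dependency – Requires a capable GPU or CPU to run efficiently. The full 671B-parameter model needs server-class hardware, and the small distilled versions that fit on laptops are noticeably less capable.

- Limited Pre-Trained Knowledge – May not be as up to date as GPT-4 or Claude 3.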

- Community-Driven Updates – Since it’s open-source, improvements rely on developer contributions.

Wrapping Up!

DeepSeek-R1 is a major step forward for local AI development, offering privacy, control, and cost-effective AI solutions. Developers and businesses looking for a secure and flexible alternative to cloud-based models can get started with the steps in this tutorial.

FAQs

Is DeepSeek-R1 better than GPT-4?

It depends on the use case. DeepSeek-R1 is open-source and can run locally, giving you full control, while GPT-4 is cloud-based with superior knowledge breadth but requires an internet connection.

Can I fine-tune DeepSeek-R1?

Yes! The MIT license allows customization and fine-tuning to fit specific applications.

Does DeepSeek-R1 support commercial use?

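Yes! The MIT license permits commercial use with minimal conditions, mainly keeping the copyright and license notice. The distilled variants are built on Qwen and Llama base models, so the licenses of those base models also apply to them.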

About Author

Pankaj Sakariya - Delivery Manager

Pankaj is a results-driven professional with a track record of successfully managing high-impact projects. His ability to balance client expectations with operational excellence makes him an invaluable asset. Pankaj is committed to ensuring smooth delivery and exceeding client expectations, with a strong focus on quality and team collaboration.